Google’s DeepMind and Google Cloud have revealed a new tool to help better identify when AI-generated images are being used, according to an August 29 blog post.

SynthID, which is currently in beta, is aimed at curbing the spread of misinformation by adding an invisible, permanent watermark to images to identify them as computer-generated. It’s currently available to a limited number of Vertex AI customers who are using Imagen, one of Google’s text-to-image generators.

This invisible watermark is embedded directly into the pixels of an image created by Imagen and remains intact even when the image undergoes modifications such as filters or color alterations.

Beyond just adding watermarks to images, SynthID employs a second approach in which it can assess the likelihood that an image was created by Imagen.

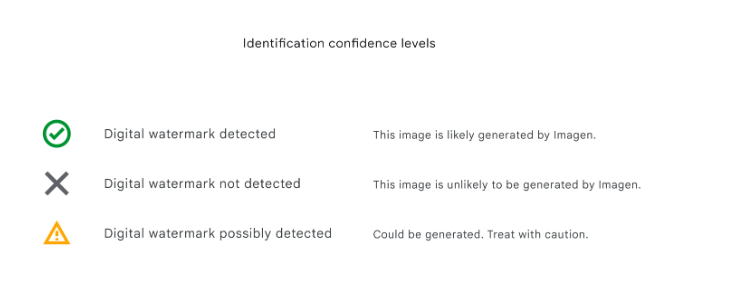

The AI tool provides three “confidence” levels for interpreting the results of digital watermark identification:

- “Detected” – the image is likely generated by Imagen

- “Not Detected” – the image is unlikely to be generated by Imagen

- “Possibly detected” – the image could be generated by Imagen. Treat with caution.

In the blog post, Google mentioned that while the technology “isn’t perfect,” its internal testing has shown the tool to be accurate against common image manipulations.

Due to advancements in deepfake technology, tech companies are actively seeking ways to identify and flag manipulated content, especially when that content disrupts social norms and creates panic – such as the fake image of the Pentagon being bombed.

The EU, of course, is already working through its Code of Practice on Disinformation to implement technology that can recognize and label this type of content for users across Google, Meta, Microsoft, TikTok, and other social media platforms. The Code is the first self-regulatory framework intended to motivate companies to collaborate on solutions for combating misinformation. When it was first introduced in 2018, 21 companies had already agreed to commit to it.

While Google has taken its own approach to addressing the issue, a consortium called the Coalition for Content Provenance and Authenticity (C2PA), backed by Adobe, has been a leader in digital watermark efforts. Google previously introduced the “About this image” tool to provide users with information about the origins of images found on its platform.

SynthID is another next-gen method for identifying digital content, acting as a sort of “upgrade” to identifying a piece of content through its metadata. Since SynthID’s invisible watermark is embedded into an image’s pixels, it’s compatible with other image identification methods that are based on metadata, and it remains detectable even when that metadata is lost.

However, with the rapid advancement of AI technology, it remains uncertain whether technical solutions like SynthID will be completely effective in addressing the growing challenge of misinformation.

Editor’s note: This article was written by an nft now staff member in collaboration with OpenAI’s GPT-4.